Abstract:

This work presents an implementation procedure for the Intel distribution of the Open Visual Inference and Neural Network Optimization (OpenVINO) toolkit. AI inferencing is an essential capability of AI solution deployment, which requires quick results and clear visualization in a user-friendly fashion. The toolkit lets users optimize, tune, and run comprehensive AI inference using the OpenVINO Model Optimizer, runtime, and development tools.

OpenVINO workflows can reduce complex inference procedures to a single command. This generic implementation in Python lets users test the power of OpenVINO on either Google Colab or a Jupyter Notebook.

Introduction:

Computer vision (CV) based Artificial Intelligence (AI) solutions are making a great impact on the automation of workflows for businesses across all industries at every process level. With solutions expected to grow multifold, training and inference remain computationally demanding as complexity and edge performance requirements increase. A typical graphics-based AI process comprises the stages of architecting, training, fine-tuning, inference, deployment, and maintenance, along with the hiring and training of skilled staff. The resulting costs form a barrier for small and medium-sized players trying to justify their investment. A low-code approach would add great value in terms of ROI and help industrial players keep up in the race for competitive advantage.

Advantages of a Low-Code Approach

- Improved agility: Multiple device compatibility.

- Decreased costs.

- Higher productivity.

- Better customer experience.

- Effective risk management and governance.

- Change easily.

With these low-code benefits, organizations are better equipped to adapt and respond quickly to fast-changing business conditions. The OpenVINO toolkit provides a platform for selecting pre-trained, optimized AI inference models with a single click. Users can execute AI inference on multiple Intel-enabled devices, with plugins available to support heterogeneous architectures and parallel requests. Users can quickly build software modules with AI algorithms in an intuitive drag-and-drop interface that includes popular frameworks like TensorFlow, PyTorch, and the OpenVINO toolkit.

When connected with a device management dashboard, prebuilt workflows on specific nodes or edge devices can be built with almost no code.

The OpenVINO toolkit facilitates faster inference of deep learning models. This article demonstrates face detection using Intel OpenVINO. For this detection, we used Intel's pre-trained object detection models. The face detection network uses a MobileNet backbone by default.

Architecture

The Model Optimizer receives a pre-trained model. It optimizes the model and converts it into its intermediate form, the Intermediate Representation (IR), which consists of an .xml file and a .bin file. The intermediate form is the only format that the Inference Engine accepts and understands.

The Inference Engine helps in the model's appropriate execution on many devices. It keeps track of the libraries needed to run the code on various systems.

The Inference Engine supports synchronous and asynchronous requests. Synchronous requests are executed one after the other, whereas asynchronous requests can be executed simultaneously by multiple executors.
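The difference between the two request modes can be illustrated without any OpenVINO dependency. The following is a minimal sketch using Python's standard `concurrent.futures` module. The function `fake_infer` is a hypothetical stand-in for a real inference call and is not part of the OpenVINO API, and the worker count is an arbitrary assumption.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_infer(frame_id, delay=0.05):
    """Hypothetical stand-in for one inference request (not an OpenVINO call)."""
    time.sleep(delay)  # simulate the time a device spends on the request
    return frame_id * 2


def run_sync(frame_ids):
    """Synchronous mode: each request finishes before the next one starts."""
    return [fake_infer(i) for i in frame_ids]


def run_async(frame_ids, workers=4):
    """Asynchronous mode: several requests are in flight at the same time."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in input order even though requests overlap
        return list(pool.map(fake_infer, frame_ids))


if __name__ == "__main__":
    frames = list(range(8))
    for runner in (run_sync, run_async):
        start = time.perf_counter()
        results = runner(frames)
        elapsed = time.perf_counter() - start
        print(runner.__name__, results, f"{elapsed:.2f}s")
```

Both functions return the same results; only the elapsed time differs, because the asynchronous version overlaps the waiting time of several requests.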

OpenVINO also comes with tools that support the creation and optimization of machine learning models: the Open Model Zoo, a repository of pre-trained models; the Post-Training Optimization Tool, a tool for model quantization; and the Accuracy Checker, a tool for checking the accuracy of various ML models.

Uses of OpenVINO

- Computer Vision inference on a variety of hardware platforms.

- Models from PyTorch, TensorFlow, and other frameworks can be imported and optimised.

- Extend the use of deep learning models beyond computer vision.

- Takes less time for inference on CPUs when compared to TensorFlow.

SYSTEM REQUIREMENTS

Hardware

6th-10th Generation Intel® Core™ processors

Intel® Xeon® v5 family

Intel® Xeon® v6 family

Intel® Movidius™ Neural Compute Stick

Intel® Neural Compute Stick 2

Intel® Vision Accelerator Design with Intel® Movidius™ VPUs

Operating System - Microsoft Windows* 10 64-bit

Software

Microsoft Visual Studio

CMake 3.4 or higher 64-bit

Python 3.6.5 or higher 64-bit

Installation

Download the latest version of OpenVINO for Windows from the link given below

https://www.intel.com/content/www/us/en/developer/tools/openvino-toolkit-download.html

Select the operating system type, distribution, version type, and installer type, then register with your email address to start downloading the executable file.

Double-click OpenVino_toolkit_p_.exe to open the installer window.

Click next and finish the first part of the installation.

Once the installation is complete, the files appear in the installation directory, which by default is:

C:\Program Files (x86)\IntelSWTools\openvino_

Install Python 3.6 and CMake support

Download the Community version of Microsoft Visual Studio* 2019 for the OpenVINO™ toolkit from the link given below.

Run the Visual Studio Community 2019 executable file.

Check the boxes as given below in Workloads and Individual components.

The installation of Microsoft Visual Studio with C++ is now complete.

Activate the OpenVINO environment by running the bat file as given below

C:\Program Files (x86)\Intel\openvino_\bin>setupvars.bat

After successful execution, a message similar to this will be displayed.

Converting the model to Intermediate Representation (IR)

Deploy TensorFlow Model

Train the TensorFlow model and export the inference graph (savedmodel.pb). We can run Model Optimizer with the default options because the inference graph already contains all of the needed information about the model, such as the intended input shape and output node name.

Run the mo_tf.py script in the model_optimizer folder:

C:\Program Files (x86)\Intel\openvino_\deployment_tools\model_optimizer\> python mo_tf.py --input_model ssd_inception_v2_coco_2018_01_28/frozen_inference_graph.pb --tensorflow_object_detection_api_pipeline_config pipeline.config --reverse_input_channels --tensorflow_use_custom_operations_config \intel\openvino\deployment_tools\model_optimizer\extensions\front\tf\ssd_v2_support.json

The Model Optimizer script takes three inputs for the conversion: the input inference graph, the pipeline config that was used for training, and the ssd_v2_support.json file.
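When the conversion has to be repeated for several models, it can help to assemble the command in Python rather than retyping it. The following is a minimal sketch that only builds the argument list from the flags shown in the command above. The helper name `build_mo_tf_command` is hypothetical, and the default script path assumes the code is run from inside the model_optimizer folder.

```python
def build_mo_tf_command(input_model, pipeline_config, support_json,
                        script="mo_tf.py"):
    """Return the mo_tf.py invocation as an argument list for subprocess.run.

    Only the flags from the command shown in this article are used.
    """
    return [
        "python", script,
        "--input_model", input_model,
        "--tensorflow_object_detection_api_pipeline_config", pipeline_config,
        "--reverse_input_channels",
        "--tensorflow_use_custom_operations_config", support_json,
    ]


if __name__ == "__main__":
    command = build_mo_tf_command(
        "ssd_inception_v2_coco_2018_01_28/frozen_inference_graph.pb",
        "pipeline.config",
        r"\intel\openvino\deployment_tools\model_optimizer"
        r"\extensions\front\tf\ssd_v2_support.json",
    )
    # Printing only; pass the list to subprocess.run(command, check=True)
    # inside an activated OpenVINO environment to actually convert the model.
    print(" ".join(command))
```

Passing the arguments as a list avoids quoting problems with paths that contain spaces, such as "Program Files (x86)".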

If the conversion is successful, we will receive 'frozen_inference_graph.xml' with the IR model description and 'frozen_inference_graph.bin' with the model weights data.
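A quick way to confirm that a conversion produced a usable IR is to check that both files of the pair exist. The following is a small sketch using only the standard library. The helper name `find_ir_pair` is hypothetical, and it assumes the .bin file sits next to the .xml file with the same base name.

```python
from pathlib import Path


def find_ir_pair(xml_path):
    """Return (xml, bin) paths if both IR files exist, otherwise None."""
    xml_file = Path(xml_path)
    # Assumption: the weights file shares the base name of the .xml file
    bin_file = xml_file.with_suffix(".bin")
    if xml_file.is_file() and bin_file.is_file():
        return xml_file, bin_file
    return None


if __name__ == "__main__":
    pair = find_ir_pair("frozen_inference_graph.xml")
    print("IR found:" if pair else "IR incomplete or missing:", pair)
```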

Deploying other custom models

The Model Optimizer automatically recognizes the framework when a model file uses that framework's standard extension (if not, use the --framework parameter to specify it):

.caffemodel (Caffe)

.params (MXNet)

.onnx (ONNX)

.nnet (Kaldi)

C:\Program Files (x86)\Intel\openvino_\deployment_tools\model_optimizer\> python mo.py --input_model PATH_TO_INPUT_MODEL --input_proto
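The extension-based lookup described above can be mirrored in a few lines of Python, for example to decide in a script whether the framework needs to be named explicitly. This is an illustrative sketch, not the Model Optimizer's own logic. The function name `guess_framework` is hypothetical, and the lowercase framework labels are assumptions chosen for the example.

```python
from pathlib import Path

# Extension-to-framework pairs taken from the list in this article.
# The lowercase labels are illustrative assumptions.
FRAMEWORK_BY_EXTENSION = {
    ".caffemodel": "caffe",
    ".params": "mxnet",
    ".onnx": "onnx",
    ".nnet": "kaldi",
}


def guess_framework(model_path):
    """Return the framework implied by the file extension, or None."""
    suffix = Path(model_path).suffix.lower()
    return FRAMEWORK_BY_EXTENSION.get(suffix)


if __name__ == "__main__":
    for name in ("resnet.caffemodel", "model.onnx", "weights.dat"):
        framework = guess_framework(name)
        if framework is None:
            print(f"{name}: unknown extension, specify the framework explicitly")
        else:
            print(f"{name}: {framework}")
```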

Download Pre-trained Model

Several pre-trained models are available with OpenVINO and can be used right away. Most of the networks are SSD-based (Single Shot Detector) and offer reasonable accuracy/performance trade-offs when compared to the other available non-Intel pre-trained models.

Some of the pre-trained models are:

Object detection models:

person-detection-action-recognition

pedestrian-detection

pedestrian-and-vehicle-detector

person-vehicle-bike-detection-crossroad

vehicle-license-plate-detection

Object recognition models:

age-gender-recognition

head-pose-estimation

emotions-recognition

gaze-estimation

Action Recognition Models:

driver-action-recognition

common-sign-language

Text-to-speech models:

text-to-speech-english

Time Series Forecasting:

time-series-forecasting-electricity

The complete list of models can be found on the following page.

https://docs.openvino.ai/latest/omz_models_group_intel.html

To download these models, use the OpenVINO Model Downloader script.

Run the downloader script in the tools directory to download the detection models:

C:\Program Files (x86)\Intel\openvino_\deployment_tools\open_model_zoo\tools\downloader> python downloader.py --name face-detection-adas-0001 --output_dir

C:\Program Files (x86)\Intel\openvino_\deployment_tools\open_model_zoo\tools\downloader> python downloader.py --name person-detection-retail-0013 --output_dir

The script that was used to download the models:

import sys
from pathlib import Path

# Make the bundled 'src' package importable before loading the downloader
sys.path.insert(0, str(Path(__file__).parent / 'src'))

import open_model_zoo.model_tools.downloader

if __name__ == '__main__':
    open_model_zoo.model_tools.downloader.main()

Testing the models

Create a Python script to initialise the OpenVINO Inference Engine, load the IR model, and do inference on the provided data.

import cv2

# Title of the window used to display the frames
frame_text = "OpenVINO"

# Now load the models (weights .bin first, then the .xml description)
person_det = cv2.dnn.readNet('person-detection-retail.bin', 'person-detection-retail.xml')
face_model = cv2.dnn.readNet('face-detection-adas.bin', 'face-detection-adas.xml')

The Intermediate Representation (IR) files (person-detection-retail.bin, face-detection-adas.bin, person-detection-retail.xml, and face-detection-adas.xml) are loaded using cv2.dnn.readNet.

data = cv2.VideoCapture("data.mp4")

The data.mp4 file is read frame by frame; each frame is resized and then sent to the model as input for prediction.

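# Process the video frame by frame until a key is pressed
while cv2.waitKey(1) < 0:
    hasFrame, frame = data.read()
    if not hasFrame:
        break
    # Resize only after confirming that a frame was actually read
    frame = cv2.resize(frame, (600, 600), interpolation=cv2.INTER_CUBIC)
    face_data = cv2.dnn.blobFromImage(frame, size=(600, 300))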

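The cv2.dnn.blobFromImage function resizes the frame and returns it as a 4-D blob, which is the input to the network. It can optionally apply mean subtraction, scaling, and channel swapping; the call above uses the defaults for these options.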

    face_model.setInput(face_data)
    face_output = face_model.forward()
    # Each detection row has 7 values: index 2 is the confidence,
    # indices 3-6 are the normalised box corners
    for detection in face_output.reshape(-1, 7):
        face_confidence = float(detection[2])
        xmin = int(detection[3] * frame.shape[1])
        ymin = int(detection[4] * frame.shape[0])
        xmax = int(detection[5] * frame.shape[1])
        ymax = int(detection[6] * frame.shape[0])
        # Draw only detections above the confidence threshold
        if face_confidence > 0.8:
            tf = 2  # line thickness of the box
            cv2.rectangle(frame, (xmin, ymin), (xmax, ymax), color=(255, 255, 255), thickness=tf)
            cv2.putText(frame, 'FACE', (xmin, ymin - 10), cv2.FONT_ITALIC, 0.4, (255, 0, 0), 1, cv2.LINE_AA)
    # Run the person detector on the same frame
    person_data = cv2.dnn.blobFromImage(frame, size=(600, 300))
    person_det.setInput(person_data)
    person_output = person_det.forward()
    for detection in person_output.reshape(-1, 7):
        person_confidence = float(detection[2])
        xmin_person = int(detection[3] * frame.shape[1])
        ymin_person = int(detection[4] * frame.shape[0])
        xmax_person = int(detection[5] * frame.shape[1])
        ymax_person = int(detection[6] * frame.shape[0])
        if person_confidence > 0.8:
            tf = 2
            cv2.rectangle(frame, (xmin_person, ymin_person), (xmax_person, ymax_person), color=(255, 255, 255), thickness=tf)
            cv2.putText(frame, 'Person', (xmin_person, ymin_person), cv2.FONT_ITALIC, 0.4, (255, 0, 0), 1, cv2.LINE_AA)
    cv2.imshow(frame_text, frame)

# Release the video and close the display window when the loop ends
data.release()
cv2.destroyAllWindows()
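The face and person loops above repeat the same conversion from a detection row to pixel coordinates. As a sketch of how that step could be factored out, the helper below takes one 7-value row and returns a pixel box. The name `to_pixel_box` is hypothetical, and the row layout (confidence at index 2, normalised xmin, ymin, xmax, ymax at indices 3 to 6) is taken from how the loops above index the output.

```python
def to_pixel_box(detection, frame_width, frame_height, min_confidence=0.8):
    """Convert one 7-value detection row to (xmin, ymin, xmax, ymax) in pixels.

    Returns None when the confidence is not above min_confidence.
    Assumed layout: index 2 = confidence, indices 3-6 = box corners in 0-1.
    """
    confidence = float(detection[2])
    if confidence <= min_confidence:
        return None
    xmin = int(detection[3] * frame_width)
    ymin = int(detection[4] * frame_height)
    xmax = int(detection[5] * frame_width)
    ymax = int(detection[6] * frame_height)
    return xmin, ymin, xmax, ymax


if __name__ == "__main__":
    # Made-up rows for illustration only, not real model output
    rows = [
        [0, 1, 0.9, 0.25, 0.5, 0.75, 1.0],
        [0, 1, 0.3, 0.10, 0.1, 0.20, 0.2],
    ]
    for row in rows:
        print(to_pixel_box(row, frame_width=600, frame_height=400))
```

With such a helper, each loop body would reduce to one call followed by the drawing code, and the threshold would be defined in a single place.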

RESULTS

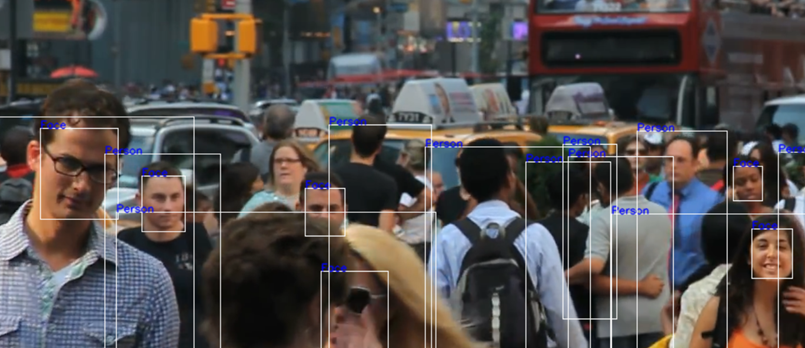

The output of the OpenVINO model can be seen here using imshow.

Conclusion

Computer vision applications are widely used, and developers can make them more robust and scalable by using the OpenVINO toolkit. We looked at how to convert a TensorFlow model to the Intermediate Representation and how to run pre-trained models for inference with OpenVINO. OpenVINO's optimizations aim to deliver a significant performance boost, making it possible to execute demanding deep learning algorithms on relatively average hardware.

Reference

- https://docs.openvino.ai/2019_R3/_docs_install_guides_installingopenvino_windows.html

- https://docs.openvino.ai/latest/omz_models_group_intel.html

- https://en.wikipedia.org/wiki/OpenVINO

- https://medium.com/analytics-vidhya/intel-openvino-on-google-colab-20ac8d2eede6

- https://docs.openvino.ai/latest/getstarted.html